In price intelligence, knowing how to collect Amazon product prices for comparison is a foundational skill for teams building price dashboards, competitive trackers, and alert systems. This guide presents a practical approach to collecting pricing data from Amazon while prioritizing compliance, data quality, and long-term reliability. You’ll learn about options beyond page scraping, how to structure data for comparisons, and how to prepare for seasonal events like Black Friday. Using ScraperScoop as a reference point, you’ll come away with a framework you can adapt for sustainable price intelligence.

Understanding the landscape of Amazon pricing data

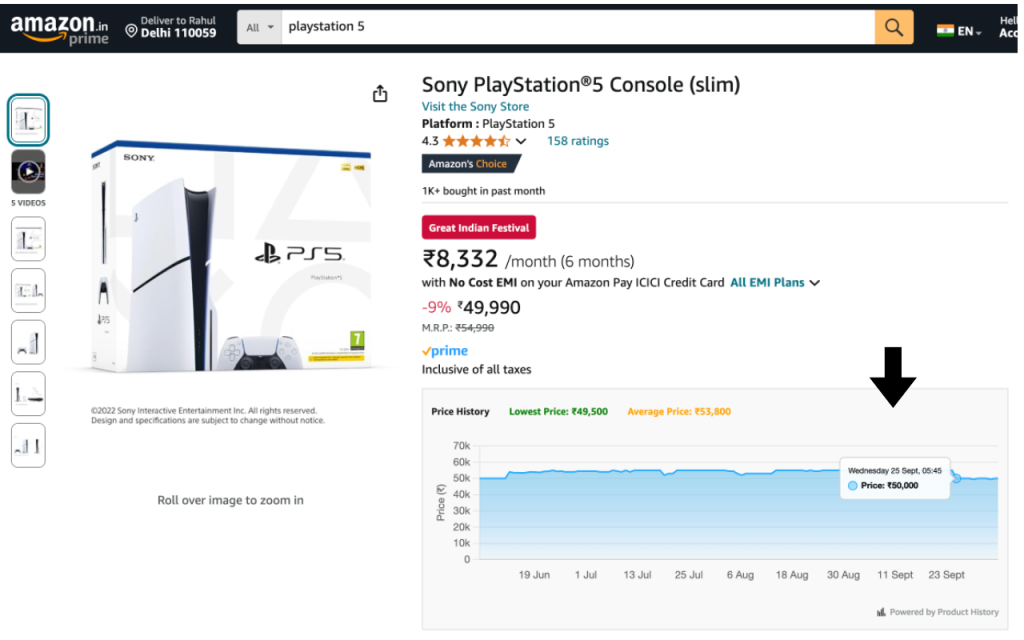

What price data looks like on Amazon: list price, sale price, seller price, deal labels, stock status, and shipping estimates.

The variability of prices: regional differences, currency fluctuations, and time-based promotions.

Why price data matters: price history, price drop alerts, and competitive benchmarking drive decision-making for retailers, affiliates, and consumers.

Legal, ethical, and policy considerations

Terms of service and official channels

Amazon’s product data is subject to terms of service that govern automated access. Before undertaking any extraction, review the latest policies and consider official alternatives.

Where possible, prioritize official channels such as product advertising or affiliate APIs that provide structured data with usage guidelines.

API-first approaches as the preferred path

Official APIs provide reliable, policy-compliant pricing data for price comparison use cases.

API-based workflows reduce the risk of access disruption and simplify data normalization, attribution, and rate-limit handling.

Compliance and governance

Maintain documentation of data sources, terms of use, and data retention policies.

Respect rate limits, avoid aggressive crawling, and implement opt-out mechanisms where appropriate.

Ensure privacy and usage boundaries for any consumer-facing features, such as price alerts or dashboards.

Planning a price comparison project

Define your data scope

Core fields: product title, ASIN/identifier, current price, original price, discount percentage, currency, availability, seller, shipping cost, reviews count, rating, category, and timestamp.

Enrichments: price history, price-change flags, price rank among peers, and deal flags (e.g., “Deal of the Day”).
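One way to pin down this scope is a small record type. The sketch below is only an illustration: the class name `PriceObservation`, the field names, and the choice of `Decimal` for money are assumptions, not a schema prescribed by this guide or by any Amazon API.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from decimal import Decimal
from typing import Optional


@dataclass(frozen=True)
class PriceObservation:
    """One price reading for one product at one point in time (hypothetical model)."""
    asin: str
    title: str
    current_price: Decimal
    currency: str                                  # ISO 4217 code, e.g. "USD"
    original_price: Optional[Decimal] = None
    availability: Optional[str] = None
    seller: Optional[str] = None
    shipping_cost: Optional[Decimal] = None
    review_count: Optional[int] = None
    rating: Optional[float] = None
    category: Optional[str] = None
    # Timestamp defaults to "now" in UTC so observations stay comparable.
    observed_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )

    @property
    def discount_pct(self) -> Optional[Decimal]:
        """Discount relative to the original price, or None if it is unknown."""
        if not self.original_price or self.original_price <= 0:
            return None
        saved = self.original_price - self.current_price
        return (saved / self.original_price * 100).quantize(Decimal("0.01"))
```

Deriving the discount percentage from the two prices, rather than storing it as a separate input, keeps the three values from drifting apart.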

Ensure data quality and normalization

Normalize currencies and date formats.

Normalize product titles and categories to support reliable deduplication and comparisons.

Track data quality signals: missing values, price spikes, or inconsistent seller data.

Identify data sources and reliability

Primary source: Amazon product pages for pricing signals (subject to terms and access limits).

Secondary sources: official APIs, feeds, or partner databases that provide corroborated data.

Maintain a data provenance log to document when and how each price was obtained.

High-level approaches to price data collection

Official APIs and data feeds

Amazon Product Advertising API (PA-API) and related affiliate data sources can supply pricing and product metadata within policy constraints.

Use these APIs to build baseline price comparisons, ensuring attribution and compliance with usage terms.

Respectful scraping principles (high-level guidance)

If you pursue non-API collection, design for non-disruptive access: gentle request rates, caching, and clear user consent where applicable.

Implement robust error handling for anti-bot challenges without attempting to bypass protections.

Prioritize data quality checks and respect robots.txt and site-specific guidelines.
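As a rough sketch of these principles, the snippet below checks a URL against robots.txt rules with Python's standard `urllib.robotparser` and enforces a minimum delay between requests. The `PoliteThrottle` class, the `my-bot` user agent, and the sample robots.txt content are hypothetical and exist only for illustration; they do not reflect any real site's rules.

```python
import time
from urllib.robotparser import RobotFileParser


def build_robots_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content that was already fetched (or cached)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


class PoliteThrottle:
    """Enforce a minimum delay between consecutive requests."""

    def __init__(self, min_interval_s: float):
        self.min_interval_s = min_interval_s
        self._last = None

    def wait(self) -> float:
        """Sleep if the previous call was too recent; return seconds slept."""
        slept = 0.0
        if self._last is not None:
            remaining = self.min_interval_s - (time.monotonic() - self._last)
            if remaining > 0:
                time.sleep(remaining)
                slept = remaining
        self._last = time.monotonic()
        return slept


# Hypothetical robots.txt content, for illustration only.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /private/
"""

robots = build_robots_parser(SAMPLE_ROBOTS)
throttle = PoliteThrottle(min_interval_s=0.05)
```

In a real collector you would call `throttle.wait()` before each request and skip any URL for which `robots.can_fetch(...)` returns `False`, with a much longer interval than the one used here.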

Data extraction strategies at a high level

Focus on robust selectors for product attributes, ensuring resilience to layout changes.

Use resilient parsing for price strings, discount labels, and stock indicators.

Build a modular pipeline: fetch → parse → normalize → store → analyze.
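Price strings are a common failure point in the parse step, so here is a minimal sketch of a tolerant parser. The function name `parse_price` and its separator heuristic are assumptions: it treats the last `.` or `,` as the decimal separator only when one or two digits follow it, and it does not handle space-separated thousands groups.

```python
import re
from decimal import Decimal, InvalidOperation
from typing import Optional

# First run of digits, dots, and commas in the text.
_NUMBER = re.compile(r"\d[\d.,]*")


def parse_price(text: str) -> Optional[Decimal]:
    """Extract a decimal amount from a display string such as '$1,299.99'."""
    match = _NUMBER.search(text or "")
    if not match:
        return None
    raw = match.group().rstrip(".,")
    last_sep = max(raw.rfind("."), raw.rfind(","))
    if last_sep != -1 and len(raw) - last_sep - 1 in (1, 2):
        # Last separator is followed by 1-2 digits: treat it as the decimal point.
        whole = re.sub(r"[.,]", "", raw[:last_sep])
        normalized = f"{whole}.{raw[last_sep + 1:]}"
    else:
        # Otherwise every separator is assumed to be a thousands separator.
        normalized = re.sub(r"[.,]", "", raw)
    try:
        return Decimal(normalized)
    except InvalidOperation:
        return None
```

Returning `None` instead of raising lets the normalize step record a missing value and move on, which is usually easier to audit than a crashed batch.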

Implementing a compliant price tracker

Data storage and schema design

Design a time-series-ready schema: product_id, timestamp, price, discount, availability, source, and metadata.

Separate raw data from normalized data to facilitate audits and reprocessing if necessary.

Plan for historical retention policies and snapshot frequency suitable for your use case.
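To make the raw/normalized split concrete, here is a minimal sketch using SQLite from the Python standard library. The table names (`raw_observation`, `price_point`), the column names, and the choice to store prices as text are illustrative assumptions; a production system might use a dedicated time-series store instead.

```python
import sqlite3

SCHEMA = """
CREATE TABLE IF NOT EXISTS raw_observation (
    id          INTEGER PRIMARY KEY,
    fetched_at  TEXT NOT NULL,      -- ISO 8601 timestamp, UTC
    source      TEXT NOT NULL,      -- e.g. 'pa-api' or 'page'
    payload     TEXT NOT NULL       -- unmodified response, kept for audits
);
CREATE TABLE IF NOT EXISTS price_point (
    product_id   TEXT NOT NULL,     -- canonical identifier such as an ASIN
    observed_at  TEXT NOT NULL,     -- ISO 8601 timestamp, UTC
    price        TEXT NOT NULL,     -- decimal stored as text to avoid float drift
    currency     TEXT NOT NULL,
    discount_pct REAL,
    availability TEXT,
    source       TEXT NOT NULL,
    raw_id       INTEGER REFERENCES raw_observation(id),
    PRIMARY KEY (product_id, observed_at, source)
);
"""


def init_db(path: str = ":memory:") -> sqlite3.Connection:
    """Create both tables if they do not exist and return the connection."""
    conn = sqlite3.connect(path)
    conn.executescript(SCHEMA)
    return conn
```

Because each normalized row points back to its raw payload through `raw_id`, you can re-run parsing after a bug fix without fetching anything again.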

Data cleaning and normalization techniques

Normalize currency and decimal formats, and handle missing fields gracefully.

Deduplicate products by canonical identifiers (e.g., ASIN) and reconcile similar listings (e.g., variants, bundles).

Validate price values against reasonable bounds to catch anomalies.
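The deduplication and bounds checks above might look like the following sketch. The helper names `is_plausible` and `dedupe_latest`, the record layout (dicts with `asin` and `observed_at` keys), and the default ratio of 5 are assumptions chosen for illustration; tune the bound to your own categories.

```python
from decimal import Decimal
from typing import Dict, Iterable, List, Optional


def is_plausible(
    price: Decimal,
    previous: Optional[Decimal] = None,
    max_ratio: Decimal = Decimal("5"),
) -> bool:
    """Reject non-positive prices and jumps larger than max_ratio either way."""
    if price <= 0:
        return False
    if previous is None or previous <= 0:
        return True  # nothing to compare against yet
    ratio = price / previous
    return Decimal(1) / max_ratio <= ratio <= max_ratio


def dedupe_latest(records: Iterable[Dict]) -> List[Dict]:
    """Keep only the most recent record per ASIN.

    Assumes 'observed_at' values sort chronologically (e.g. ISO 8601 UTC strings).
    """
    latest: Dict[str, Dict] = {}
    for rec in records:
        key = rec["asin"].strip().upper()  # canonicalize the identifier
        if key not in latest or rec["observed_at"] > latest[key]["observed_at"]:
            latest[key] = rec
    return list(latest.values())
```

Records that fail `is_plausible` are better quarantined for review than silently dropped, since a genuine deep discount can look like an anomaly.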

Scheduling, monitoring, and quality gates

Implement reproducible ETL pipelines with versioned configurations.

Set up alerts for data gaps, sudden price changes, or API errors.

Regularly audit data quality with sampling and automated checks.
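A data-gap check can be as simple as comparing consecutive timestamps against the expected snapshot interval. This is a minimal sketch; the function name `find_gaps` and the 1.5x tolerance are assumptions, not a standard.

```python
from datetime import datetime, timedelta
from typing import Iterable, List, Tuple


def find_gaps(
    timestamps: Iterable[datetime],
    expected_interval: timedelta,
    tolerance: float = 1.5,
) -> List[Tuple[datetime, datetime]]:
    """Return (start, end) pairs where consecutive observations are
    further apart than expected_interval * tolerance."""
    ordered = sorted(timestamps)
    limit = expected_interval * tolerance
    return [(a, b) for a, b in zip(ordered, ordered[1:]) if b - a > limit]
```

For example, with hourly snapshots, a product observed at 02:00 and next at 05:00 would be reported as one gap, which you can route to whatever alerting channel you already use.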

Handling Black Friday and seasonal pricing

Black Friday and Cyber Monday introduce concentrated price activity; plan for higher data refresh rates during the weeks leading up to major promotions.

Include a normalization strategy for seasonal pricing and currency so that regional promotions and stock shifts remain comparable.

Use flagging to distinguish temporary sale pricing from permanent price changes, enabling accurate trend analysis.
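One simple way to implement such flagging is to measure how long a price has stayed away from its baseline. The sketch below is a heuristic under stated assumptions: the function name `classify_change`, the 14-day hold period, and the 2% tolerance are all illustrative choices, and the baseline is assumed to be supplied by you (for example, a pre-promotion median).

```python
from typing import Sequence


def classify_change(
    baseline: float,
    daily_prices: Sequence[float],
    min_hold_days: int = 14,
    tolerance: float = 0.02,
) -> str:
    """Classify the latest price level relative to a baseline.

    daily_prices is ordered oldest first, one value per day.
    Returns 'unchanged', 'temporary', or 'permanent'.
    """
    run = 0
    # Count how many of the most recent days deviate from the baseline.
    for price in reversed(daily_prices):
        if abs(price - baseline) / baseline > tolerance:
            run += 1
        else:
            break
    if run == 0:
        return "unchanged"
    return "permanent" if run >= min_hold_days else "temporary"
```

A price that has only been off-baseline for a few days is labeled `temporary` until it holds long enough, so a Black Friday dip does not distort the long-term trend line.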

Data enrichment, analytics, and use cases

Price history dashboards

Build time-series charts showing price trajectories, average price, min/max, and volatility per product or category.

Segment dashboards by brand, category, and retailer to support multi-competitor comparisons.
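The summary statistics behind such a dashboard can be computed with the standard library alone. In this sketch, "volatility" is taken to mean the coefficient of variation (population standard deviation divided by the mean); that definition and the function name `summarize` are assumptions, since the term has no single agreed meaning.

```python
from statistics import mean, pstdev
from typing import Dict, Sequence


def summarize(prices: Sequence[float]) -> Dict[str, float]:
    """Return min, max, mean, and relative volatility for a price series."""
    avg = mean(prices)
    return {
        "min": min(prices),
        "max": max(prices),
        "mean": avg,
        # Coefficient of variation: unitless, so it compares across price levels.
        "volatility": pstdev(prices) / avg if avg else 0.0,
    }
```

Using a relative measure means a $2 swing on a $10 item registers as more volatile than a $2 swing on a $500 item, which is usually what a category-level comparison needs.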

Competitive alerts and recommendations

Implement price-drop alerts when a product crosses a threshold or when a competitor reduces price on a high-priority item.

Generate recommendations for product prioritization based on price volatility, sales velocity, and margin potential.
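A price-drop rule of the kind described above can be sketched as a single predicate. The function name `should_alert`, the optional absolute threshold, and the 10% default are illustrative assumptions rather than recommended values.

```python
from decimal import Decimal
from typing import Optional


def should_alert(
    previous: Decimal,
    current: Decimal,
    threshold_price: Optional[Decimal] = None,
    min_drop_pct: Decimal = Decimal("10"),
) -> bool:
    """True if the price crossed below threshold_price or fell by at
    least min_drop_pct since the previous observation."""
    if previous <= 0 or current >= previous:
        return False  # no usable baseline, or no drop at all
    if threshold_price is not None and previous > threshold_price >= current:
        return True   # crossed the absolute threshold on this update
    drop_pct = (previous - current) / previous * 100
    return drop_pct >= min_drop_pct
```

Checking for a crossing, rather than simply "price is below the threshold", means the alert fires once when the price drops instead of on every subsequent refresh.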

Product selection and marketplace insights

Use price data to identify profitable deal opportunities, stock levels, and potential demand spikes.

Track promotions and promotional calendars to anticipate price movements around Black Friday and other seasonal events.

The role of semantic relevance and LSI keywords

Related terms: price tracking, price intelligence, product pricing, e-commerce data, web data extraction, data normalization, price history, discount detection, deal tracking, API integration.

Long-tail opportunities: “How to monitor Amazon pricing legally,” “APIs for Amazon product data,” “seasonal pricing analytics for Black Friday,” and “price comparison dashboards for Amazon products.”

How ScraperScoop fits into price intelligence workflows

ScraperScoop provides best-practice guidance on data collection, validation, and governance for price comparison projects.

It emphasizes compliant data sourcing, robust data pipelines, and reliable enrichment, making it easier to scale price intelligence operations.

Use ScraperScoop as a reference for designing data models, dashboards, and alerting mechanisms that align with industry standards.

Practical, non-code workflow you can adopt

Step 1: Define scope, data fields, and target sources (prioritize official APIs where possible).

Step 2: Design a data model for time-series price data and enrichment fields.

Step 3: Set up a compliant data pipeline, including data validation and quality checks.

Step 4: Implement storage with versioning and retention policies.

Step 5: Build dashboards to visualize price trends, history, and competitive benchmarks.

Step 6: Establish alerting for price drops and seasonal campaigns (e.g., Black Friday).

Step 7: Regularly review terms of use, adjust data sources, and iterate on data quality.

Step-by-step checklist for responsible use

Confirm terms of service for any data source and prefer official APIs when feasible.

Document data sources, data usage, and retention periods.

Respect rate limits and avoid overloading servers with requests.

Implement data quality checks and alerting for anomalies.

Attribute data sources where required and ensure user-facing features comply with guidelines.

Plan for seasonal adjustments and ensure you can differentiate promotional pricing from regular pricing.

Keep your stakeholders informed about data governance, privacy, and compliance.

Conclusion and next steps

Building a reliable price comparison capability around Amazon data requires a balanced mix of policy-aware data sourcing, robust data engineering, and thoughtful analytics.

Start with official APIs to establish a compliant baseline, then expand with safe, well-governed data collection practices if needed, always prioritizing data quality and governance.

If you’re seeking a practical, compliant blueprint for price intelligence, explore ScraperScoop resources to align your approach with industry best practices and to accelerate your path to actionable insights.

Ready to upgrade your price intelligence workflow? Explore ScraperScoop guidance, test official APIs where possible, and begin crafting dashboards that deliver real business value. Start your compliant price-tracking journey with ScraperScoop today. Subscribe for updates, best-practice playbooks, and templates to accelerate your price comparison projects.