Introduction

Web Scraping Zepto Product Data for Price Monitoring is a strategic practice that combines data engineering with competitive intelligence. In today’s fast-paced e-commerce landscape, teams rely on timely price snapshots to adjust promotions, optimize margins, and stay ahead of rivals. This guide explains how to approach Zepto product data responsibly, capture high-quality price signals, and build a scalable workflow that informs pricing decisions without violating terms of service. By focusing on data quality, governance, and actionable insights, you’ll transform scattered product attributes into a reliable pricing engine.

Why Zepto product data matters for price monitoring

Price volatility insights: Track how Zepto’s pricing shifts across categories, brands, and regions to identify opportunities for margin protection or strategic discounting.

Competitive intelligence: Understand positioning relative to other quick-commerce players and traditional retailers to inform assortments and promotions.

Historical pricing trajectories: Build price history dashboards to detect seasonality, promotions, and price wars.

Data-driven pricing experiments: Use collected signals to design experiments that test price elasticity and optimize revenue.

Legal and ethical considerations

Respect terms of service: Always review Zepto’s terms, robots.txt, and any data usage policies. If data access is restricted, seek alternatives such as official APIs, partnerships, or consent-based data sharing.

Use public or authorized data sources: Prefer data that is publicly available or provided through sanctioned channels. Avoid actions that mimic login sessions, bypass protections, or extract data at unsustainable scales.

Data privacy and governance: Do not collect sensitive customer information. Ensure your data handling complies with applicable data protection regulations and internal governance policies.

Rate limits and responsible scraping: Implement conservative request pacing to avoid disrupting Zepto’s site performance and to minimize risk of IP blocking.
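Conservative pacing can be made concrete with a small wrapper that keeps a minimum gap between requests and backs off exponentially after transient failures. This is a sketch under stated assumptions, not a production client: `PoliteFetcher` is a hypothetical name, the 5-second gap and 2-second base backoff are illustrative values, and `fetch` stands in for whatever HTTP call you actually use.

```python
import time
from typing import Callable, Optional


class PoliteFetcher:
    """Hypothetical helper: spaces out requests and retries transient errors."""

    def __init__(
        self,
        fetch: Callable[[str], str],      # your HTTP call (assumption)
        min_interval: float = 5.0,        # seconds between requests (illustrative)
        max_retries: int = 3,
        base_backoff: float = 2.0,        # first retry delay in seconds (illustrative)
        max_backoff: float = 60.0,
        clock: Callable[[], float] = time.monotonic,
        sleep: Callable[[float], None] = time.sleep,
    ) -> None:
        self.fetch = fetch
        self.min_interval = min_interval
        self.max_retries = max_retries
        self.base_backoff = base_backoff
        self.max_backoff = max_backoff
        self.clock = clock
        self.sleep = sleep
        self._last_request: Optional[float] = None

    def _wait_for_slot(self) -> None:
        # Sleep only as long as needed to keep requests min_interval apart.
        if self._last_request is not None:
            elapsed = self.clock() - self._last_request
            if elapsed < self.min_interval:
                self.sleep(self.min_interval - elapsed)
        self._last_request = self.clock()

    def get(self, url: str) -> str:
        attempt = 0
        while True:
            self._wait_for_slot()
            try:
                return self.fetch(url)
            except OSError:
                # Treat I/O errors as transient; give up after max_retries retries.
                if attempt >= self.max_retries:
                    raise
                # Exponential backoff: base, 2*base, 4*base, ... capped at max_backoff.
                self.sleep(min(self.base_backoff * 2 ** attempt, self.max_backoff))
                attempt += 1
```

Injecting `clock` and `sleep` keeps the pacing logic testable without network access. The sketch retries on any `OSError` (urllib's `URLError` and `HTTPError` are subclasses); a real client should retry only transient statuses such as 429 or 503, and may add random jitter so retries from several workers do not line up.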

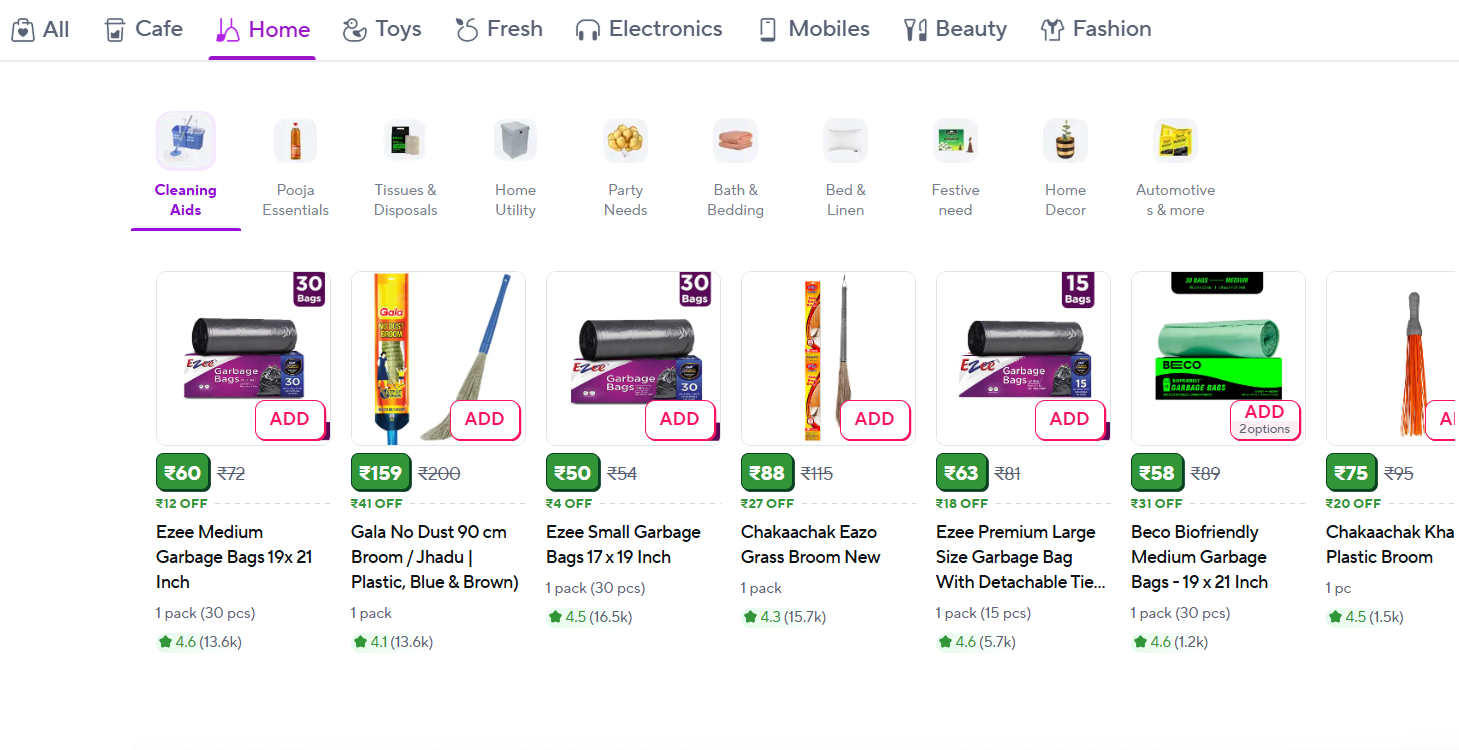

Key data points to collect (Zepto product data) for price monitoring

Product identifiers: SKU, product name, brand, model, and category.

Pricing signals: current price, list price, discounts, promotional pricing, price history timestamps.

Availability: stock status, delivery options, estimated delivery times.

Product attributes: size, color, variation, packaging, weight, and other attributes that affect perceived value.

Product metadata: rating counts, review sentiment, seller information if applicable, return policy.

Contextual signals: regional pricing, currency, tax considerations, and any shipping or handling fees.

Data quality markers: data source timestamp, crawl depth, and confidence indicators (e.g., parsing confidence, presence of missing fields).
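Writing the fields above down as a typed record before any collection starts makes gaps visible early. The dataclass below is a hypothetical schema covering a subset of those fields; storing prices as integers in minor units (paise, assuming INR) is a design assumption that avoids floating-point rounding in later comparisons.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional


@dataclass
class PriceObservation:
    """Hypothetical schema for one product price snapshot."""
    # Product identifiers
    sku: str
    name: str
    brand: Optional[str]
    category: str
    # Pricing signals in minor units (e.g. paise) to avoid float rounding
    price_minor: int
    list_price_minor: Optional[int] = None
    currency: str = "INR"
    # Availability and context
    in_stock: Optional[bool] = None
    region: Optional[str] = None          # e.g. a delivery zone (assumption)
    # Data-quality markers
    source_url: str = ""
    observed_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))
    missing_fields: List[str] = field(default_factory=list)

    @property
    def discount_pct(self) -> Optional[float]:
        """Discount implied by list price vs. current price, when both are known."""
        if not self.list_price_minor or self.list_price_minor <= 0:
            return None
        return round(100 * (1 - self.price_minor / self.list_price_minor), 2)
```

Deriving the discount from stored prices, rather than scraping a displayed "% off" label, keeps one source of truth for the number.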

High-level data collection strategies (ethical, scalable)

Start with a plan: Define target sections (e.g., product listing pages, category pages) and data schemas before scraping.

Verify accessibility: Confirm the pages are accessible to the public without authentication and that scraping is permitted under the terms.

Use respectful crawling: Implement sensible crawl rates, retry policies, and back-off strategies to minimize impact on the site.

Prefer official channels: Where possible, use any Zepto APIs, partner data feeds, or data-sharing arrangements instead of ad hoc scraping.

Document provenance: Record the exact URLs, timestamps, and data collection conditions to support traceability and auditability.
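A provenance record can be as small as one JSON line per fetch. The sketch below is an assumption about what to log, not a standard format: `ProvenanceRecord`, `make_provenance`, and the field names are hypothetical, and the SHA-256 content hash lets you prove later which raw payload a parsed price came from.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class ProvenanceRecord:
    """Hypothetical audit record written alongside every raw payload."""
    url: str
    fetched_at: str        # ISO-8601 UTC timestamp
    user_agent: str
    http_status: int
    content_sha256: str    # fingerprint of the raw bytes as received
    parser_version: str    # which extraction logic produced downstream rows


def make_provenance(url: str, raw: bytes, user_agent: str, http_status: int,
                    parser_version: str,
                    fetched_at: Optional[datetime] = None) -> ProvenanceRecord:
    ts = (fetched_at or datetime.now(timezone.utc)).isoformat()
    return ProvenanceRecord(url, ts, user_agent, http_status,
                            hashlib.sha256(raw).hexdigest(), parser_version)


def to_log_line(rec: ProvenanceRecord) -> str:
    # One JSON object per line: easy to append to a log and to load later.
    return json.dumps(asdict(rec), sort_keys=True)
```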

Respecting robots.txt and terms of service

Always check robots.txt for disallowed paths and respect those rules.

Review the terms of service for data usage restrictions and the website’s acceptable use policy.

If in doubt, contact the site owner for permission or seek alternative data sources.
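Python's standard library includes a robots.txt parser, so the check above can be automated before any URL is queued. The rules in `SAMPLE_ROBOTS` are invented for illustration and are not Zepto's actual file; `check_robots` and the `PriceMonitorBot/0.1` user agent are hypothetical names.

```python
from typing import Iterable, Optional, Tuple
from urllib.robotparser import RobotFileParser


def check_robots(robots_lines: Iterable[str], user_agent: str,
                 url: str) -> Tuple[bool, Optional[int]]:
    """Return (allowed, crawl_delay_seconds) for url under the given robots.txt."""
    parser = RobotFileParser()
    parser.parse(robots_lines)
    return parser.can_fetch(user_agent, url), parser.crawl_delay(user_agent)


# Invented rules for illustration only; they are not Zepto's actual robots.txt.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /account/
Crawl-delay: 5
""".splitlines()
```

Against a live site you would call `set_url()` with the site's robots.txt address and then `read()` instead of `parse()`. A permissive robots.txt does not override the terms of service; both checks still apply.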

Ethical data collection in practice

Collect only what you need for price monitoring (e.g., price, availability, product attributes).

Avoid aggressive scraping patterns that mimic credentialed access or bypass anti-scraping measures.

Use a transparent data-sharing policy within your organization and with stakeholders.

Data quality and normalization for Zepto product data

Standardize product identifiers: Normalize SKUs and product titles to enable reliable deduplication.

Normalize prices: Convert currencies when needed and handle tax-inclusive vs. tax-exclusive pricing consistently.

Handle missing data gracefully: Use default values or probabilistic imputation only where appropriate, and flag incomplete records for follow-up.

De-duplicate records: Implement logic to merge records that refer to the same product across pages or variations.

Validate data integrity: Check for improbable price values, negative prices, or inconsistent availability status.

Enrich data with context: Append regional attributes, promotions, and currency codes to support accurate comparisons.
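Several of these rules fit in a few helper functions. The sketch below is hypothetical: it parses displayed prices into integer minor units with `Decimal` (so "₹1,299.50" and "1,29,999" both parse), normalizes titles for matching, and deduplicates by SKU, falling back to the normalized title. Title-only matching can merge different pack sizes if the size is missing from the title, so treat it as a fallback.

```python
import re
import unicodedata
from decimal import ROUND_HALF_UP, Decimal
from typing import Dict, Iterable, List

_PRICE_RE = re.compile(r"\d[\d,]*(?:\.\d+)?")


def parse_price_minor(text: str) -> int:
    """Parse a displayed price such as '₹1,299.50' into minor units (129950)."""
    match = _PRICE_RE.search(text)
    if match is None:
        raise ValueError(f"no numeric price in {text!r}")
    amount = Decimal(match.group().replace(",", ""))
    return int((amount * 100).quantize(Decimal("1"), rounding=ROUND_HALF_UP))


def normalize_title(title: str) -> str:
    """Fold case, Unicode variants, punctuation, and spacing for matching."""
    text = unicodedata.normalize("NFKC", title).casefold()
    text = re.sub(r"[^\w\s]", " ", text)
    return re.sub(r"\s+", " ", text).strip()


def dedupe(records: Iterable[Dict]) -> List[Dict]:
    """Keep one record per product, preferring the latest observation.

    Key: SKU when present, else the normalized title. Assumes observed_at
    values are ISO-8601 strings in the same timezone, so they sort as text.
    """
    latest: Dict[str, Dict] = {}
    for rec in records:
        key = rec.get("sku") or normalize_title(rec["title"])
        if key not in latest or rec["observed_at"] > latest[key]["observed_at"]:
            latest[key] = rec
    return list(latest.values())
```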

Building a price monitoring pipeline (high-level architecture)

Data ingestion layer: Collect raw HTML, JSON, or API responses, with source metadata (URL, timestamp, user agent).

Extraction and parsing: Use structured parsers to extract the data fields defined in your schema. Favor resilient selectors and schema-first parsing.

Data storage: Store raw data and processed data in a data warehouse or data lake with versioning and lineage.

Normalization and enrichment: Apply standardization rules, unit conversions, and data enrichment logic.

Price monitoring engine: Implement rules and alerts for price changes, thresholds, and anomalies.

Visualization and reporting: Build dashboards and automated reports for pricing teams and decision-makers.

Compliance and governance: Maintain a data usage log, access controls, and audit trails.
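Schema-first parsing can be illustrated with structured data that some product pages embed as schema.org `Product` markup inside `<script type="application/ld+json">` tags. Whether a given Zepto page carries such markup is an assumption you would need to verify. The sketch uses only the standard library, maps whatever it finds onto a fixed output schema, and skips blocks that do not match the expected shape instead of guessing; `JsonLdCollector` and `extract_offers` are hypothetical names, and `@graph`-wrapped documents are not handled.

```python
import json
from html.parser import HTMLParser
from typing import Any, Dict, List


class JsonLdCollector(HTMLParser):
    """Collects the text of <script type="application/ld+json"> elements."""

    def __init__(self) -> None:
        super().__init__()
        self._inside = False
        self.blocks: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._inside = True
            self.blocks.append("")

    def handle_endtag(self, tag):
        if tag == "script":
            self._inside = False

    def handle_data(self, data):
        if self._inside:
            self.blocks[-1] += data  # script text may arrive in several chunks


def extract_offers(html: str) -> List[Dict[str, Any]]:
    """Map schema.org Product/Offer JSON-LD onto a fixed output schema."""
    collector = JsonLdCollector()
    collector.feed(html)
    collector.close()
    rows = []
    for block in collector.blocks:
        try:
            doc = json.loads(block)
        except json.JSONDecodeError:
            continue  # skip malformed blocks instead of guessing
        for item in doc if isinstance(doc, list) else [doc]:
            if not isinstance(item, dict) or item.get("@type") != "Product":
                continue
            offer = item.get("offers") or {}
            if isinstance(offer, list):
                offer = offer[0]
            if not isinstance(offer, dict):
                offer = {}
            rows.append({
                "sku": item.get("sku"),
                "name": item.get("name"),
                "price": offer.get("price"),
                "currency": offer.get("priceCurrency"),
                "availability": offer.get("availability"),
            })
    return rows
```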

Handling dynamic content and data reliability (without bypassing protections)

Dynamic pages and asynchronous data: When prices load via JavaScript, consider server-side data sources, public APIs, or policy-compliant methods to obtain the data stream.

Headless browsers and rendering (with caution): If you must render pages to capture data, do so in a controlled, consent-based environment and avoid large-scale, unauthorized scraping.

Data verification: Cross-check critical fields against multiple pages or sources to detect inconsistencies and reduce the risk of stale data.

Scheduling and cadence: Align crawl frequency with how fast Zepto’s prices typically move in your target categories, balancing freshness with site load.
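One way to align crawl frequency with how fast prices move is an adaptive revisit interval: shorten it when a change is observed and lengthen it when nothing changes, always within fixed bounds. The halving and 1.5x factors and the 1 to 24 hour bounds below are illustrative assumptions, and `Cadence` is a hypothetical name.

```python
from dataclasses import dataclass


@dataclass
class Cadence:
    """Hypothetical adaptive revisit interval for one SKU or category, in hours."""
    interval_h: float = 6.0
    min_h: float = 1.0    # never revisit more often than this (protects the site)
    max_h: float = 24.0   # never let data get staler than this

    def update(self, price_changed: bool) -> float:
        # Move faster when prices move, slow down when they are stable.
        factor = 0.5 if price_changed else 1.5
        self.interval_h = min(self.max_h, max(self.min_h, self.interval_h * factor))
        return self.interval_h
```

The lower bound matters as much as the adaptation: it caps load on the site no matter how volatile a category becomes.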

Data storage, governance, and deployment best practices

Versioned datasets: Keep historical snapshots to enable pricing trend analyses and anomaly detection.

Data lineage: Track data origin, processing steps, and transformation rules to support audits.

Security and access control: Implement role-based access and encryption for sensitive data.

Observability: Monitor job health, error rates, and data quality metrics; set up alerts for data gaps.

Scalability: Plan for growth in data volume and diversification of data sources, without compromising reliability.
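Versioned, append-only snapshots can be prototyped with SQLite from Python's standard library before moving to a warehouse. The table and column names are hypothetical; the composite primary key makes re-runs idempotent, `source_sha256` is an assumed lineage link back to the raw payload, and the `ON CONFLICT ... DO NOTHING` clause requires SQLite 3.24 or later.

```python
import sqlite3
from typing import List, Optional, Tuple

SCHEMA = """
CREATE TABLE IF NOT EXISTS price_snapshots (
    sku           TEXT    NOT NULL,
    region        TEXT    NOT NULL,
    observed_at   TEXT    NOT NULL,  -- ISO-8601 UTC
    price_minor   INTEGER NOT NULL CHECK (price_minor >= 0),
    source_sha256 TEXT,              -- lineage: hash of the raw payload
    PRIMARY KEY (sku, region, observed_at)
);
"""


def record_snapshot(conn: sqlite3.Connection, sku: str, region: str,
                    observed_at: str, price_minor: int,
                    source_sha256: Optional[str] = None) -> None:
    # Append-only: history rows are never updated; duplicate re-runs are skipped.
    conn.execute(
        "INSERT INTO price_snapshots VALUES (?, ?, ?, ?, ?) "
        "ON CONFLICT(sku, region, observed_at) DO NOTHING",
        (sku, region, observed_at, price_minor, source_sha256),
    )


def price_history(conn: sqlite3.Connection, sku: str,
                  region: str) -> List[Tuple[str, int]]:
    return conn.execute(
        "SELECT observed_at, price_minor FROM price_snapshots "
        "WHERE sku = ? AND region = ? ORDER BY observed_at",
        (sku, region),
    ).fetchall()
```

The `CHECK` constraint is a last-line integrity guard: a negative price raises `sqlite3.IntegrityError` instead of silently entering the history.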

Scraper Scoop: quick take for price-minded teams

Start with a documented data model and a minimal viable dataset to validate your pricing analytics.

Prioritize data quality; better signals beat larger volumes of noisy data when it comes to price decisions.

Align scraping cadence with business use cases: tracking competitive shifts may require daily snapshots, while longer-term pricing trends can be tracked weekly.

Use partner or API channels first; scraping should complement official data sources, not replace them.

Build clear governance around usage rights, data retention, and compliance to enable scalable, long-term monitoring.

Transforming data into actionable insights (price monitoring at scale)

Price anomaly detection: Flag sudden price dips or spikes relative to category peers, and annotate each flag with likely causes such as promotions or stock events.

Promotion impact analysis: Link price changes to marketing campaigns and stock availability to evaluate ROI.

Category-level pricing strategies: Detect patterns across product groups to support cross-sell and up-sell opportunities.

Competitive benchmarking: Compare Zepto price points with major rivals to identify opportunities for margin optimization.
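For the anomaly-detection step, one common robust choice (offered here as an example, not prescribed by this guide) is the modified z-score of Iglewicz and Hoaglin, which measures distance from the category median in units of the median absolute deviation (MAD) and conventionally flags values above 3.5. `flag_price_anomalies` is a hypothetical name.

```python
from statistics import median
from typing import Dict, List


def flag_price_anomalies(prices: Dict[str, float],
                         threshold: float = 3.5) -> List[str]:
    """Return SKUs whose price is unusually far from their category peers.

    Modified z-score: 0.6745 * |x - median| / MAD. MAD (median absolute
    deviation) is far less distorted by the outliers we are hunting than
    the mean and standard deviation would be.
    """
    values = list(prices.values())
    if len(values) < 3:
        return []  # too few peers for a meaningful comparison
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return []  # at least half the peers share the median price; no robust scale
    return [sku for sku, v in prices.items()
            if 0.6745 * abs(v - med) / mad > threshold]
```

The same function can be applied to day-over-day percentage changes instead of price levels, which targets sudden dips and spikes rather than items that are simply priced differently.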

Case studies or practical workflows (illustrative examples)

- Example workflow A: Daily price snapshot for top 100 SKUs in electronics

  - Gather: price, discount, stock status, delivery estimates

  - Normalize: currency conversion, tax treatment

  - Analyze: price delta vs. category average; alert on anomalous changes

  - Act: adjust recommended promotional calendar for the next day

- Example workflow B: Regional price comparison for fast-moving consumer goods

  - Gather: regional prices, promotions, delivery costs

  - Enrich: regional demand indicators and seasonality factors

  - Analyze: regional price elasticities and regional margin opportunities

  - Act: tailor regional promos and inventory allocation
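The alerting part of workflow A reduces, in its simplest form, to comparing consecutive snapshots and reporting large moves. This sketch compares yesterday's and today's prices per SKU; the 10% threshold and the function name are illustrative assumptions, and a category-average comparison would be layered on top.

```python
from typing import Dict, List, Tuple


def price_change_alerts(yesterday: Dict[str, int], today: Dict[str, int],
                        pct_threshold: float = 10.0
                        ) -> List[Tuple[str, int, int, float]]:
    """Compare two snapshots (sku -> price in minor units) and report large moves.

    Returns (sku, old, new, pct_change) tuples, largest absolute move first.
    """
    alerts = []
    for sku, new in today.items():
        old = yesterday.get(sku)
        if old is None or old == 0:
            continue  # new listing or unusable baseline: handle separately
        pct = 100.0 * (new - old) / old
        if abs(pct) >= pct_threshold:
            alerts.append((sku, old, new, round(pct, 1)))
    return sorted(alerts, key=lambda a: abs(a[3]), reverse=True)
```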

Challenges and mitigation strategies

Anti-scraping defenses: Avoid attempting to bypass protections; instead, pursue compliant data access routes or partner agreements.

Data volatility: Prices can change quickly; keep a consistent collection cadence and validate each snapshot so you can distinguish real price shifts from noise.

Data quality gaps: Implement compensating controls like confidence scores and data-health dashboards.

Legal and reputational risk: Loss of access or legal action is possible if terms are violated; document compliance efforts and seek sanctioned channels.

Scalability constraints: Plan for incremental scaling, using modular pipelines and cloud-based storage to handle growth.
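Confidence scores can start as simple rules. The sketch below is hypothetical: the required and optional field lists, the hard-failure checks, and the 0.1 penalty per missing optional field are assumptions to tune against your own data-health dashboard.

```python
from typing import Dict, List, Tuple

REQUIRED = ("sku", "title", "price_minor", "observed_at")   # assumption
OPTIONAL = ("list_price_minor", "in_stock", "region")        # assumption


def score_record(rec: Dict) -> Tuple[float, List[str]]:
    """Return (confidence between 0 and 1, list of issues) for one parsed record."""
    issues = [f"missing:{name}" for name in REQUIRED if rec.get(name) in (None, "")]
    price, list_price = rec.get("price_minor"), rec.get("list_price_minor")
    if isinstance(price, int) and price <= 0:
        issues.append("non_positive_price")
    if isinstance(price, int) and isinstance(list_price, int) and price > list_price:
        issues.append("price_above_list_price")
    if issues:
        return 0.0, issues  # hard failures: quarantine the record for review
    # Soft gaps: each missing optional field lowers confidence by 0.1.
    missing_optional = sum(1 for name in OPTIONAL if rec.get(name) is None)
    return round(1.0 - 0.1 * missing_optional, 2), []
```

Tracking the share of records below a cutoff per crawl gives a single number to chart and alert on.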

Getting started: practical checklist for teams

Define objectives: What price signals are needed, and in what time frame?

Confirm compliance: Review terms, robots.txt, and potential APIs or partnerships.

Design the data model: List required fields and acceptable value ranges.

Map data sources: Choose Zepto pages to monitor and any alternative sources.

Establish data quality gates: Validation rules, anomaly checks, and remediation steps.

Implement governance: Data access policies, retention schedules, and audit logs.

Build a minimal pipeline: End-to-end flow from collection to alerting with a pilot set of SKUs.

Measure impact: Track accuracy of price signals and their influence on pricing decisions.

Iterate: Refine selectors, validation rules, and cadence based on feedback.

Conclusion and next steps

Web Scraping Zepto Product Data for Price Monitoring, when done responsibly and with proper governance, can unlock timely price signals that enhance pricing strategy and competitive intelligence. By focusing on data quality, legal compliance, and scalable architectures, teams can derive meaningful insights without compromising site integrity or violating terms. Start small with a well-defined data model, validate your pipeline, and gradually scale as you demonstrate business value. If you’re ready to optimize your price-monitoring workflow, consider partnering with experts to design a compliant, robust data pipeline and governance framework.

Calls to action

Download our price-monitoring data collection checklist to kickstart your project.

Schedule a consultation to review your Zepto data requirements, feasibility, and compliance considerations.

Subscribe for ongoing tips on ethical web scraping, data quality, and pricing analytics.

Appendix: glossary of terms (optional quick reference)

Web Scraping: Automated extraction of data from websites.

Zepto Product Data: Product-related information published on Zepto’s platform, including pricing and attributes.

Price Monitoring: The process of tracking price changes over time to inform business decisions.

Data Quality: Accuracy, completeness, consistency, and reliability of data.

ETL: Extract, Transform, Load—the process of moving data from source to storage with transformations.

Compliance: Adhering to laws, regulations, and site terms of service.

Closing note: This guide aims to be a practical, permission-first resource for teams pursuing price intelligence through Zepto product data. Always prioritize ethical considerations, legal compliance, and transparent data governance as you build and operate your price-monitoring workflows.