An ecommerce product scraper is a tool that extracts product data from online stores to support price monitoring, catalog enrichment, and competitive analysis. In this guide from ScraperScoop, we’ll unpack what a robust ecommerce product scraper does, why it matters for modern ecommerce teams, how to choose the right solution, and best practices to maximize data quality and ROI. By the end, you’ll have a clear roadmap to deploy a scraper that delivers reliable, actionable insights across your ecommerce initiatives.

What is an ecommerce product scraper?

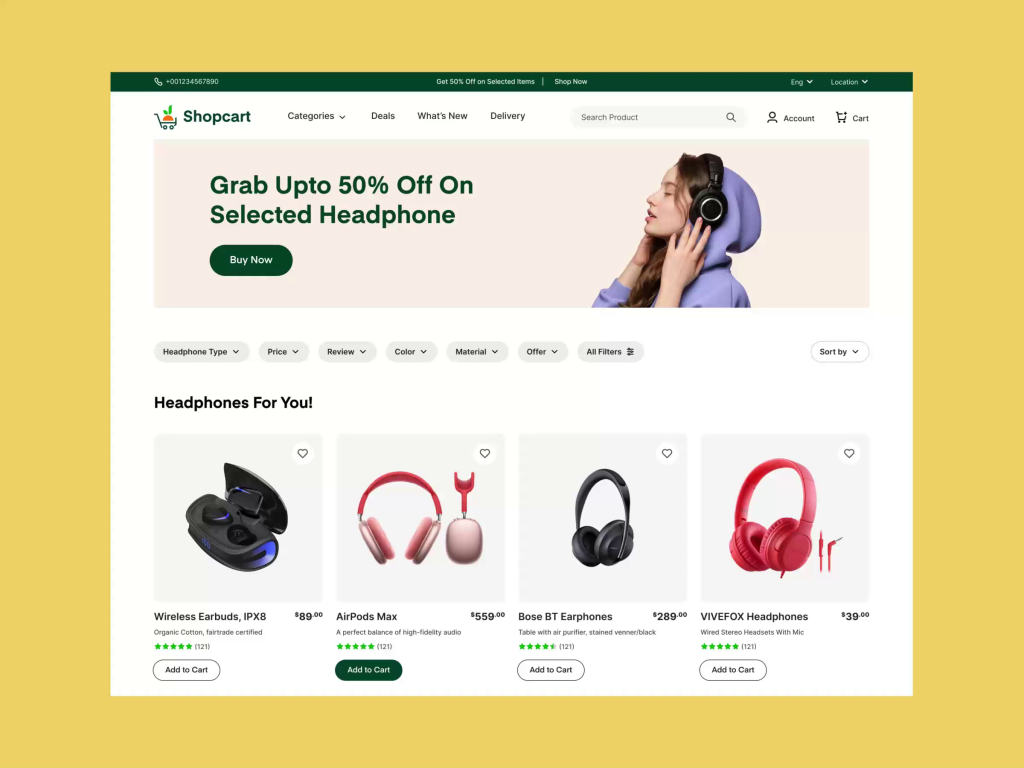

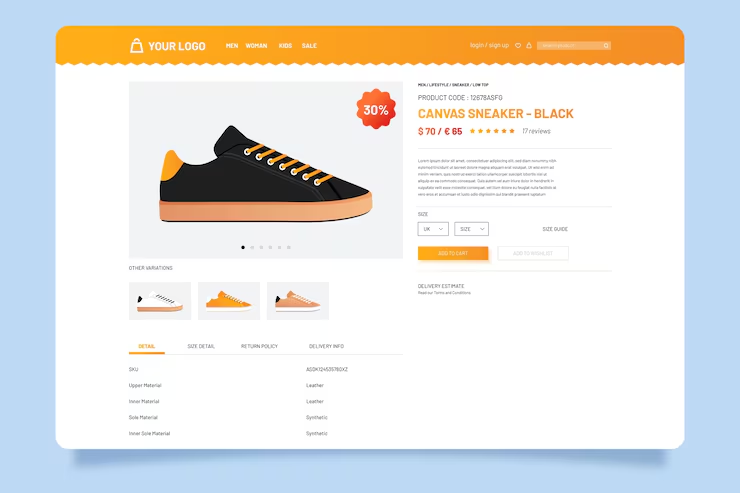

An ecommerce product scraper is software that programmatically visits retailer pages, category listings, and product detail pages to collect structured data such as titles, prices, SKUs, descriptions, specifications, stock status, images, and reviews. The primary goal is to transform disparate web data into a consistent, analyzable feed that can power price intelligence, catalog management, competitive benchmarking, and market research. In practice, a top-tier ecommerce product scraper supports scalable data extraction, robust error handling, and flexible output formats so you can feed downstream analytics platforms, data warehouses, or marketing systems. Key terms to know:

Data extraction: the process of pulling relevant product attributes from pages.

Data normalization: converting data into a common schema (e.g., currency normalization, unit standardization).

Web crawler: the component that traverses pages to discover products and categories.

Parser: the logic that converts raw HTML or API responses into structured records.

ETL/ELT: the data pipeline steps that transform and load data into storage or BI tools.
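To make the parser step concrete, here is a minimal sketch that assumes the target page embeds schema.org `Product` markup as JSON-LD in a `<script type="application/ld+json">` tag. Many retailers do this, but not all. It uses only the Python standard library; the class and function names (`JsonLdCollector`, `extract_products`) are hypothetical. The sketch keeps only the first offer and ignores `@graph` wrappers, so treat it as a starting point rather than a complete parser.

```python
import json
from html.parser import HTMLParser


class JsonLdCollector(HTMLParser):
    """Collects the raw text of every <script type="application/ld+json"> element."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self.blocks = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self.blocks.append("")

    def handle_endtag(self, tag):
        if tag == "script":
            self._in_jsonld = False

    def handle_data(self, data):
        if self._in_jsonld:
            self.blocks[-1] += data


def extract_products(html):
    """Return simplified product records found in schema.org Product JSON-LD."""
    collector = JsonLdCollector()
    collector.feed(html)
    collector.close()
    products = []
    for raw in collector.blocks:
        try:
            data = json.loads(raw)
        except json.JSONDecodeError:
            continue  # skip malformed blocks instead of failing the whole page
        for item in data if isinstance(data, list) else [data]:
            if not isinstance(item, dict) or item.get("@type") != "Product":
                continue
            offers = item.get("offers") or {}
            if isinstance(offers, list):  # keep only the first offer in this sketch
                offers = offers[0] if offers else {}
            if not isinstance(offers, dict):
                offers = {}
            products.append({
                "title": item.get("name"),
                "sku": item.get("sku"),
                "price": offers.get("price"),
                "currency": offers.get("priceCurrency"),
                "availability": offers.get("availability"),
            })
    return products
```

Structured markup tends to survive visual redesigns better than CSS selectors, which is why it is often worth trying first before falling back to page-specific selectors.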

Why ecommerce teams use product scrapers

Scraping product data unlocks a wide range of strategic and operational benefits. Common use cases include:

Price monitoring and pricing intelligence: track competitor prices, promotions, and stock moves to price smarter.

Catalog enrichment: augment your own catalog with missing attributes, images, or alternate SKUs from partner sites.

Competitive benchmarking: compare assortment breadth, new product launches, and discount strategies.

Market research and demand signals: surface trends in product categories, seasonal demand, and feature preferences.

SEO and marketplace analytics: identify how competing listings describe similar products, including title and metadata patterns.

To maximize impact, teams often blend data from scraping with internal data like sales history, inventory levels, and marketing campaigns, creating a fuller picture of market dynamics.

Key features of a robust ecommerce product scraper

A dependable ecommerce product scraper should deliver reliability, accuracy, and security at scale. Core features to prioritize:

Comprehensive data capture: extract essential fields such as product name, price, currency, availability, SKU, brand, category, attributes, images, ratings, reviews, seller, and shipping details.

Rich output formats and integrations: JSON, CSV, or direct API outputs, plus ready-made connectors for data warehouses, BI tools, and product information management (PIM) systems.

Scheduling and automation: run recurring scrapes on your defined cadence (hourly, daily, weekly) with automated job retries.

Anti-bot and proxy management: robust handling of bot-detection mechanisms via rotating proxies, user-agent pools, and CAPTCHA handling where appropriate.

Dynamic content support: handle JavaScript-rendered pages via headless browsers or API endpoints when necessary.

Data normalization and deduplication: unify price formats, currencies, and attribute schemas; deduplicate similar products from multiple sources.

Quality monitoring and alerting: dashboards and alerts for data anomalies, missed scrapes, or source changes.

Compliance and governance: built-in checks for terms of service, robots.txt adherence, and rate-limiting to reduce risk.

Security and access controls: role-based access, audit trails, and secure data delivery to your pipelines.
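Automated job retries, mentioned under scheduling and automation, are commonly built on capped exponential backoff with jitter so that a temporarily failing source is not hammered. The sketch below assumes a job is any zero-argument callable; `run_with_retries` and its defaults are illustrative, not a specific product's API. The `sleep` parameter is injectable so the logic can be tested without real waiting.

```python
import random
import time


def run_with_retries(job, max_attempts=4, base_delay=1.0, max_delay=30.0, sleep=time.sleep):
    """Call job(); on failure, retry with capped exponential backoff and full jitter."""
    for attempt in range(1, max_attempts + 1):
        try:
            return job()
        except Exception:  # a real scraper would catch only transient errors (timeouts, 429, 5xx)
            if attempt == max_attempts:
                raise  # out of attempts: let the scheduler record the failure
            # Backoff ceiling doubles per attempt (1s, 2s, 4s, ...) up to max_delay;
            # full jitter waits a random time in [0, ceiling] so retries do not synchronize.
            ceiling = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, ceiling))
```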

Choosing the right ecommerce product scraper

Selecting a scraper that fits your business requires a structured evaluation. Consider the following criteria:

Reliability and scale: can the tool handle large catalogs, diverse sites, and frequent price changes without downtime?

Target coverage: does the scraper support the retailers and marketplaces most relevant to your business?

Data quality controls: how does the solution validate field formats, currency, and category mappings?

Customization and flexibility: can you tailor parsers, selectors, and workflows to your unique needs?

Compliance posture: does the vendor provide guidance on legality, robots.txt, and rate-limiting?

Integrations: seamless data delivery to your data warehouse, BI tools, or PIM systems.

Support and community: access to documentation, onboarding, and responsive support.

Total cost of ownership: consider licensing, usage tiers, proxies, and maintenance time.

Security and privacy: data handling practices, encryption in transit, and access controls.

In addition, map your internal use cases to KPIs such as data completeness, freshness, and time-to-value. A good ecommerce product scraper should deliver measurable improvements in decision speed and data-driven actions.
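Completeness and freshness are easy to compute once records share a schema. This sketch assumes each record is a dict with a `scraped_at` datetime; the field list in `REQUIRED_FIELDS` and the 24-hour freshness window are hypothetical choices you would tune per use case.

```python
from datetime import datetime, timedelta

# Hypothetical set of fields that count toward the completeness KPI.
REQUIRED_FIELDS = ("title", "price", "currency", "availability")


def completeness(records: list, fields=REQUIRED_FIELDS) -> float:
    """Fraction of required field slots that are filled (not None and not empty)."""
    if not records:
        return 0.0
    filled = sum(1 for r in records for f in fields if r.get(f) not in (None, ""))
    return filled / (len(records) * len(fields))


def freshness(records: list, now: datetime, max_age: timedelta = timedelta(hours=24)) -> float:
    """Fraction of records whose `scraped_at` timestamp is no older than max_age."""
    if not records:
        return 0.0
    fresh = sum(1 for r in records if now - r["scraped_at"] <= max_age)
    return fresh / len(records)
```

Tracking these two numbers per source over time also makes it easy to compare vendors or in-house builds on the same terms.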

Implementation options: build vs buy

Organizations face a fundamental choice: build a custom scraper in-house or buy a commercial solution. Both paths have merits:

Build: Pros include full control over architecture, data schemas, and feature prioritization. Cons include upfront development effort, ongoing maintenance, and in-house expertise requirements. A build approach is often favored when you have highly specialized data needs or strict data governance requirements.

Buy: Pros include faster time-to-value, ongoing updates, and access to a mature feature set (proxy management, CAPTCHA handling, robust parsers). Cons may include vendor lock-in and ongoing license costs. SaaS scrapers can scale with your needs and offer reliable uptime without heavy internal overhead.

Regardless of approach, design a clean data pipeline: extraction, transformation, validation, storage, and delivery to analytics or product systems. Data pipeline essentials:

Extraction layer: modular parsers that can be swapped or extended for new sources.

Transformation layer: standardize fields, deduplicate, and enrich with third-party attributes.

Storage layer: data lake or warehouse with versioning and lineage.

Delivery layer: API endpoints, database loads, or event streams to downstream systems.
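One way to keep the extraction layer modular is a registry that maps each source host to its own parser, so adding a retailer means registering one function. The sketch below is a hypothetical illustration: `shop.example.com`, its "key=value" page format, and all function names are made up, and storage and delivery are stubbed as appending to a list.

```python
from typing import Callable, Dict, Iterable, List, Tuple
from urllib.parse import urlparse

Parser = Callable[[str], dict]    # raw page text -> partial product record
_PARSERS: Dict[str, Parser] = {}  # hypothetical registry: hostname -> parser


def register(host: str):
    """Decorator: add a parser for one source without touching the rest of the pipeline."""
    def wrap(fn: Parser) -> Parser:
        _PARSERS[host] = fn
        return fn
    return wrap


def extract(url: str, page: str) -> dict:
    """Extraction layer: dispatch to the parser registered for the URL's host."""
    host = urlparse(url).hostname or ""
    parser = _PARSERS.get(host)
    if parser is None:
        raise LookupError(f"no parser registered for {host!r}")
    return {**parser(page), "source_url": url}


def transform(record: dict) -> dict:
    """Transformation layer: tidy titles and standardize currency codes."""
    out = dict(record)
    if isinstance(out.get("title"), str):
        out["title"] = " ".join(out["title"].split())  # collapse runs of whitespace
    if isinstance(out.get("currency"), str):
        out["currency"] = out["currency"].strip().upper()  # ISO 4217 codes are upper-case
    return out


def run_pipeline(pages: Iterable[Tuple[str, str]], sink: List[dict]) -> None:
    """Extract, transform, then deliver; storage/delivery is stubbed as a list append."""
    for url, page in pages:
        sink.append(transform(extract(url, page)))


@register("shop.example.com")
def parse_example_shop(page: str) -> dict:
    # Toy parser for a made-up "key=value" page format, for illustration only.
    fields = dict(line.split("=", 1) for line in page.splitlines() if "=" in line)
    return {k: fields.get(k) for k in ("title", "price", "currency")}
```

Because each layer only sees plain dicts, a parser can be swapped or rewritten after a site redesign without changing the transformation or delivery code.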

Best practices for effective scraping in ecommerce

To ensure sustainable results and minimize risk, follow these guidelines:

Respect site policies: always review robots.txt and the retailer’s terms of service. Avoid aggressive scraping that could disrupt their service.

Use compliant rate-limiting: implement adaptive throttling and politeness delays to reduce the likelihood of IP blocks.

Rotate identities: deploy rotating IPs, user-agent strings, and session management to avoid detection while staying compliant.

Handle dynamic content: leverage headless browsers or API endpoints when pages render content with JavaScript.

Manage CAPTCHAs gracefully: design workflows that minimize friction, including back-off strategies and legitimate verification paths.

Monitor changes: sites frequently update their HTML structure, so implement automatic change detection and update parsers quickly.

Validate data continuously: run automated checks for missing fields, invalid prices, or inconsistent categories.

Maintain data hygiene: schedule deduplication, normalization, and reconciliation across sources.

Guard sensitive data: ensure that data collection respects privacy rules and is stored securely.

Document your processes: maintain clear runbooks, data dictionaries, and change logs for audits and onboarding.
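Respecting robots.txt and adding politeness delays can be combined in a small gate that every request passes through. This sketch uses the standard library's `urllib.robotparser.RobotFileParser` (`parse`, `can_fetch`, `crawl_delay`); the `PoliteGate` class, the user-agent string, and the 2-second default delay are hypothetical. Fetching robots.txt itself is left to the caller, and the clock and sleep functions are injectable for testing.

```python
import time
from urllib.parse import urlparse
from urllib.robotparser import RobotFileParser


class PoliteGate:
    """Checks robots.txt rules and enforces a minimum delay between requests per host."""

    def __init__(self, user_agent, default_delay=2.0, clock=time.monotonic, sleep=time.sleep):
        self.user_agent = user_agent
        self.default_delay = default_delay
        self._robots = {}    # host -> RobotFileParser
        self._last_hit = {}  # host -> clock() value of the previous allowed request
        self._clock = clock
        self._sleep = sleep

    def load_robots(self, host, robots_txt):
        """Register robots.txt content for a host (downloading it is up to the caller)."""
        rp = RobotFileParser()
        rp.parse(robots_txt.splitlines())
        self._robots[host] = rp

    def wait_turn(self, url):
        """Return False if robots.txt disallows url; otherwise wait if needed and return True."""
        host = urlparse(url).hostname or ""
        rp = self._robots.get(host)
        if rp is not None and not rp.can_fetch(self.user_agent, url):
            return False
        # Honour a Crawl-delay directive when present, else use our own default.
        delay = rp.crawl_delay(self.user_agent) if rp is not None else None
        if delay is None:
            delay = self.default_delay
        last = self._last_hit.get(host)
        if last is not None:
            remaining = delay - (self._clock() - last)
            if remaining > 0:
                self._sleep(remaining)
        self._last_hit[host] = self._clock()
        return True
```

Adaptive throttling can build on the same structure, for example by raising a host's delay after it returns HTTP 429 responses.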

Data quality, enrichment, and normalization

High-quality data is the backbone of reliable insights. Focus on:

Price normalization: convert all prices to a single currency, account for taxes and discounts, and record price history.

Attribute mapping: normalize product attributes to a standardized schema (e.g., color, size, material) to enable cross-source comparison.

Category taxonomy: align products to a consistent category structure to simplify analysis and reporting.

Deduplication: identify duplicate products across sources using SKUs, titles, and attribute similarity.

Rich metadata: capture reviews, ratings, seller information, shipping estimates, and availability.

Validation rules: enforce data integrity checks (e.g., non-empty titles, valid URLs, proper currency codes).

Data enrichment: augment with images, alternative titles, and brand metadata to improve searchability and merchandising.
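Price normalization and validation rules can be sketched in a few functions. This example assumes prices arrive as display strings and uses `Decimal` to avoid floating-point rounding. The separator heuristic is an assumption that will misread some formats (for example, "1.299" with a dot as the thousands separator), and `RATES_TO_USD` is a hypothetical static table; a real pipeline would load dated exchange rates.

```python
import re
from decimal import Decimal, InvalidOperation
from urllib.parse import urlparse

# Hypothetical static FX table for illustration only.
RATES_TO_USD = {"USD": Decimal("1"), "EUR": Decimal("1.10"), "GBP": Decimal("1.25")}


def parse_price(text):
    """Parse a display price such as '$1,299.00' or '1.299,00 €' into a Decimal."""
    digits = re.sub(r"[^\d.,]", "", text)
    if "," in digits and "." in digits:
        # Whichever separator appears last is treated as the decimal mark.
        if digits.rfind(",") > digits.rfind("."):
            digits = digits.replace(".", "").replace(",", ".")
        else:
            digits = digits.replace(",", "")
    elif "," in digits:
        # Heuristic: a final comma followed by exactly two digits is a decimal comma.
        head, _, tail = digits.rpartition(",")
        digits = f"{head.replace(',', '')}.{tail}" if len(tail) == 2 else digits.replace(",", "")
    try:
        return Decimal(digits)
    except InvalidOperation:
        raise ValueError(f"unparseable price: {text!r}") from None


def to_usd(amount, currency):
    """Convert an amount to USD with the static table, rounded to cents."""
    return (amount * RATES_TO_USD[currency]).quantize(Decimal("0.01"))


def validate(record):
    """Return a list of rule violations; an empty list means the record passes."""
    errors = []
    if not (record.get("title") or "").strip():
        errors.append("empty title")
    url = urlparse(record.get("url") or "")
    if url.scheme not in ("http", "https") or not url.netloc:
        errors.append("invalid url")
    if not re.fullmatch(r"[A-Z]{3}", record.get("currency") or ""):
        errors.append("bad currency code")
    price = record.get("price")
    if not isinstance(price, Decimal) or price <= 0:
        errors.append("invalid price")
    return errors
```

Returning a list of violations, rather than raising on the first one, makes it simple to count failure types per source and feed them into quality dashboards.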

Use cases and impact: how teams win with an ecommerce product scraper

A well-implemented ecommerce product scraper delivers tangible business value across several domains:

Price intelligence: monitor competitor pricing to optimize margin and competitiveness.

Catalog enrichment: fill gaps in your own catalog, enabling richer product listings and better search results.

Competitive intelligence: understand assortment breadth, promotional tactics, and new product introductions.

Market trends: detect shifts in consumer demand and feature preferences to guide product development.

SEO and marketplace insights: optimize product pages by analyzing competing metadata and title patterns.

Inventory and supply chain insights: forecast demand signals and adjust procurement strategies accordingly.

The technical architecture in brief

A scalable ecommerce product scraper typically comprises several layered components:

Crawler layer: responsible for discovering product pages and navigating site structures.

Parser layer: extracts structured data from HTML or API responses.

Transformation layer: normalizes and enriches data to a common schema.

Storage layer: stores raw and processed data in a data lake or warehouse.

Processing layer: runs quality checks, deduplication, and aggregation; may power dashboards or alerts.

Delivery layer: exposes data to downstream systems via APIs, CSV/JSON downloads, or event streams.

Security layer: manages access, encryption, and monitoring to protect sensitive data.
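Deduplication in the processing layer often starts with exact SKU matches and falls back to fuzzy title comparison within the same brand. This sketch uses the standard library's `difflib.SequenceMatcher`; the 0.9 similarity threshold and the brand-must-match rule are hypothetical choices, and the pairwise comparison is quadratic, so it only suits small batches.

```python
import re
from difflib import SequenceMatcher


def normalize_title(title):
    """Lower-case, replace punctuation with spaces, and collapse whitespace."""
    return " ".join(re.sub(r"[^\w\s]", " ", title.lower()).split())


def is_duplicate(a, b, threshold=0.9):
    """Same non-empty SKU, or same brand with near-identical normalized titles."""
    if a.get("sku") and a.get("sku") == b.get("sku"):
        return True
    if (a.get("brand") or "").lower() != (b.get("brand") or "").lower():
        return False
    ratio = SequenceMatcher(None, normalize_title(a["title"]), normalize_title(b["title"])).ratio()
    return ratio >= threshold


def deduplicate(records, threshold=0.9):
    """Keep the first record of each duplicate group (O(n^2) comparisons)."""
    kept = []
    for rec in records:
        if not any(is_duplicate(rec, k, threshold) for k in kept):
            kept.append(rec)
    return kept
```

At larger scale, teams typically block candidates first (for example by brand and category) so that only a small set of records is compared pairwise.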

Security, compliance, and risk management

As you collect data across multiple sites, consider legal and operational risks:

Terms of service: ensure your scraping activities do not breach site terms or data usage restrictions.

Intellectual property: avoid scraping protected content or copyrighted materials in ways that violate licensing.

IP reputation: improper scraping can trigger blocks that disrupt access to essential data sources.

Data privacy: safeguard any customer or end-user data encountered during scraping.

Rate-limiting and responsible use: design scrapers to operate without harming target sites or services.

Getting started with ScraperScoop

ScraperScoop offers a practical path to implementing an ecommerce product scraper without unnecessary friction. Key steps to begin:

- Start with a quick assessment: map target sites, data fields, and cadence.

- Choose a data model: define the schema for products, prices, and attributes.

- Set up a prototype pipeline: build a minimal extractor for a handful of sites to validate data quality.

- Expand coverage: gradually add more sources, refining parsing and normalization rules.

- Integrate with your stack: connect to your data warehouse, BI tools, or PIM system.

- Monitor and optimize: establish dashboards for freshness, completeness, and anomaly detection.

- Experience a guided demo: request a ScraperScoop demonstration to see how the platform handles real-world catalog scraping, price tracking, and product-attribute normalization.

- Plan for scale: discuss licensing, proxies, and support options to accommodate growing catalogs.
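For the "Monitor and optimize" step, a simple anomaly check compares each new price with the median of recent history. This is a hypothetical sketch: the 50% threshold and the three-point minimum are assumptions to tune, and a sudden large swing often signals a parsing error (such as cents read as whole units) as much as a real price change.

```python
from statistics import median


def price_anomaly(history, new_price, max_change=0.5, min_points=3):
    """Flag new_price if it differs from the median of history by more than max_change
    (0.5 means 50%). Returns None when there is too little history to judge."""
    if len(history) < min_points:
        return None
    baseline = median(history)
    if baseline == 0:
        return new_price != 0
    return abs(new_price - baseline) / baseline > max_change
```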

Ready to accelerate your ecommerce data strategy? Request a demo of ScraperScoop today and explore tailored scrapers for your target retailers, currencies, and categories.

Conclusion and next steps

An ecommerce product scraper is a strategic asset for teams pursuing data-driven pricing, merchandising, and market intelligence. By selecting a robust solution, aligning it with a strong data pipeline, and following best practices for compliance, you can generate fresh, accurate product data that informs decisions across pricing, assortment, and growth initiatives. Combine solid data engineering with practical guardrails, and your scraper becomes a reliable engine for competitive advantage. Next steps:

Define your data goals and success metrics (data freshness, coverage, accuracy).

Audit target sources for feasibility, licensing, and change frequency.

Evaluate tools on reliability, scalability, and integration capabilities.

Start with a pilot project, then scale to full coverage with ongoing governance.

Explore how ScraperScoop can support your ecommerce and data teams with ready-made parsers, automation, and secure delivery.

Explore ScraperScoop capabilities, download our implementation playbook, or schedule a personalized tour to see how an ecommerce product scraper can fit your business needs.